The robots.txt file tells web crawlers which files and pages of your site they may crawl and which they should skip. Magento 2 generates a robots.txt file by default, and you can update and configure it to suit your requirements.

If your site is a staging or development environment, you may want to stop search engines from crawling and indexing it so that it does not cause duplicate site and content issues. You can easily do this from the Magento 2 Admin.
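The way crawlers read robots.txt rules can be sketched with Python's standard-library `urllib.robotparser`. This is a minimal illustration, not Magento's own code. Both rule sets and the `staging.example.com` / `www.example.com` hosts are hypothetical: the first blocks every crawler from the whole staging site, and the second blocks only a few illustrative store paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a staging site: block every crawler from every path.
STAGING_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

# Hypothetical robots.txt for a live store: block only a few illustrative paths.
PRODUCTION_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /customer/
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so nothing is fetched over the network.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    samples = [
        ("staging", STAGING_ROBOTS_TXT, "https://staging.example.com/"),
        ("staging", STAGING_ROBOTS_TXT, "https://staging.example.com/checkout/cart/"),
        ("production", PRODUCTION_ROBOTS_TXT, "https://www.example.com/"),
        ("production", PRODUCTION_ROBOTS_TXT, "https://www.example.com/checkout/cart/"),
    ]
    for label, rules, url in samples:
        print(f"{label:<10} {url:<45} crawlable={can_crawl(rules, url)}")
```

With the staging rules every URL is reported as not crawlable, while the production rules only block URLs under `/checkout/` and `/customer/`. Note that `urllib.robotparser` does simple prefix matching and ignores the wildcard patterns that some crawlers support.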

Steps to Manage robots.txt from the Magento admin

Step 1: Log in to your Magento 2 Admin.

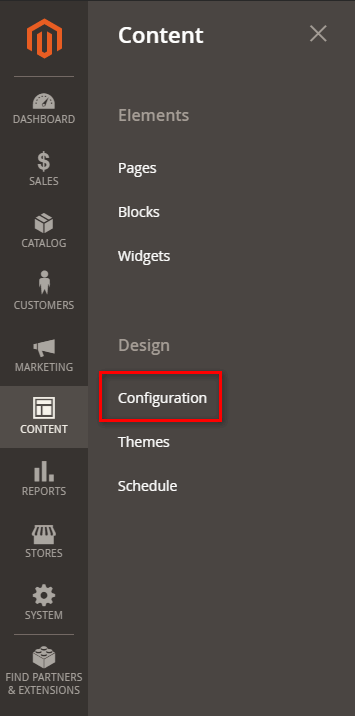

Step 2: Navigate to Content > Design > Configuration.

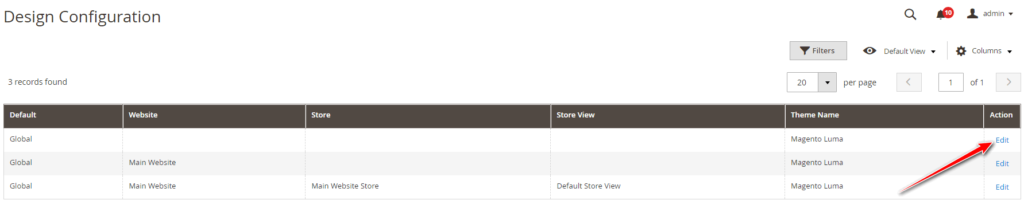

Step 3: In the Design Configuration grid, click Edit for the scope (website or store view) you want to configure.

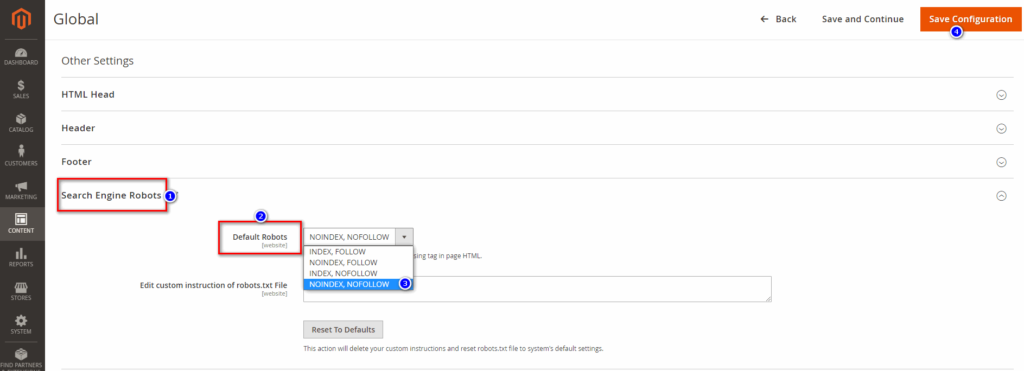

Step 4: Under Other Settings, expand the “Search Engine Robots” section.

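Step 5: Set the “Default Robots” field to NOINDEX, NOFOLLOW and click Save Configuration. This setting adds a robots meta tag to the store's pages that tells search engines not to index them or follow their links. The same section also contains the “Edit custom instruction of robots.txt File” field, where you can edit the contents of robots.txt itself.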
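The Default Robots setting is output as a `<meta name="robots">` tag in the HTML head of the store's pages, so after saving you can check what a page actually serves. The following is a minimal sketch using Python's standard `html.parser`. The helper names are hypothetical, and the sample markup is an assumed example of the tag; the exact markup may vary with your theme and version.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        # Self-closing <meta ... /> tags also reach this method via handle_startendtag.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            self.directives.append(attributes.get("content", ""))


def robots_directives(html: str) -> set[str]:
    """Return normalized robots directives, e.g. {"noindex", "nofollow"}."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    tokens: set[str] = set()
    for content in parser.directives:
        tokens.update(part.strip().lower() for part in content.split(",") if part.strip())
    return tokens


if __name__ == "__main__":
    import sys
    from urllib.request import urlopen

    if len(sys.argv) > 1:
        # Fetch a live page passed on the command line (e.g. your staging home page).
        with urlopen(sys.argv[1]) as response:
            page = response.read().decode("utf-8", errors="replace")
    else:
        # Assumed sample of the tag the Default Robots setting produces.
        page = '<html><head><meta name="robots" content="NOINDEX,NOFOLLOW"/></head></html>'
    found = robots_directives(page)
    blocked = {"noindex", "nofollow"} <= found
    print("blocked" if blocked else "NOT blocked", sorted(found))
```

Checking the live page rather than the admin value also catches cases such as full-page cache serving an older version of the page.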